Design

Prior Work

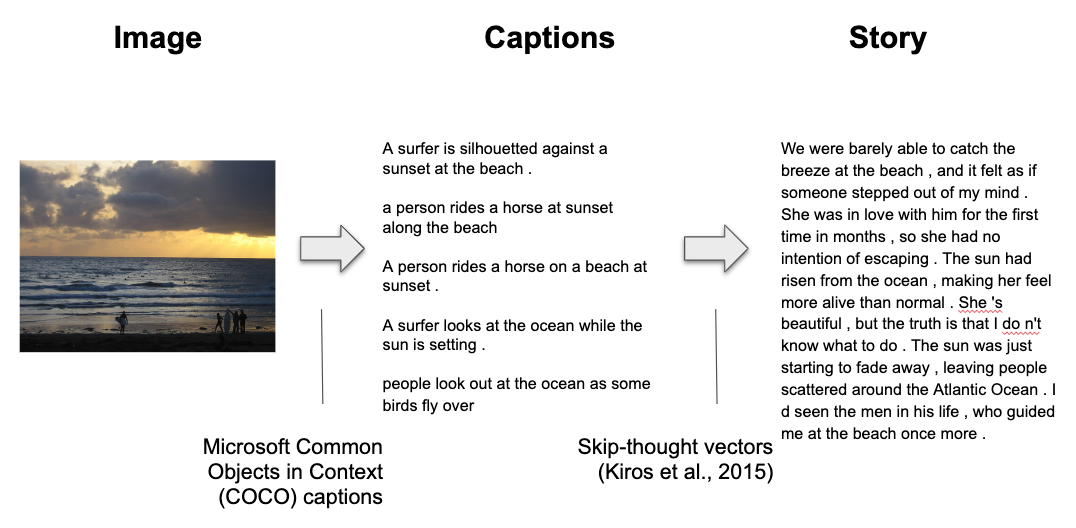

We’re building on the work of Kiros et al., who created a neural storyteller trained on romance novels. The neural storyteller is essentially a recurrent neural network decoder trained on the input text, combined with a separate model trained in parallel on COCO image/caption embeddings.
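
As we understand the public neural-storyteller release, the two halves are connected by a "style shift". A skip-thought sentence vector x built from image captions is moved into the style of the training books by subtracting the mean caption vector c and adding the mean book-passage vector b, giving x - c + b. The sketch below shows only that arithmetic. The three-dimensional vectors and the function names `mean` and `style_shift` are hypothetical stand-ins for the real high-dimensional embeddings and code.

```python
from typing import List

Vector = List[float]


def mean(vectors: List[Vector]) -> Vector:
    """Component-wise mean of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def style_shift(x: Vector, caption_mean: Vector, book_mean: Vector) -> Vector:
    """Shift a caption-style vector toward book style: x - c + b."""
    return [xi - ci + bi for xi, ci, bi in zip(x, caption_mean, book_mean)]


# Hypothetical toy embeddings, not real skip-thought vectors.
caption_vectors = [[1.0, 0.0, 2.0], [3.0, 2.0, 0.0]]
book_vectors = [[0.0, 4.0, 1.0], [2.0, 2.0, 3.0]]

c = mean(caption_vectors)  # [2.0, 1.0, 1.0]
b = mean(book_vectors)     # [1.0, 3.0, 2.0]
x = [4.0, 1.0, 0.0]        # vector summarising the captions for one image
shifted = style_shift(x, c, b)
print(shifted)             # [3.0, 3.0, 1.0]
```

Under this reading, retraining on a new genre corpus changes b, which is what steers the decoder toward that genre's style.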

Modifications

We modified two elements of the existing model from Kiros et al. First, we experimented with several settings of the model’s tuning parameters. Second, we trained the model on corpora from two different genres.

- Tuning parameters: the existing neural storyteller exposes two tuning parameters, the number of captions to condition on and the beam search width.

- Genre corpora: we created two corpora of public-domain books, one of science fiction novels and one of biographies. We trained the model on each corpus in turn. Then, for each model, we generated stories from images characteristic of romance, science fiction, and biography scenes.
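
A rough sketch, under our own assumptions, of what the two tuning parameters control. The number of captions k sets how many nearest-neighbour captions are retrieved for an image in a shared embedding space, and the beam width sets how many partial sentences the decoder keeps alive at each step. The cosine retrieval, the toy bigram table standing in for the trained decoder, and the names `top_k_captions` and `beam_search` are hypothetical, not the neural-storyteller code.

```python
import heapq
import math
from typing import Callable, Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def top_k_captions(image_vec: Sequence[float], caption_vecs: Dict[str, List[float]], k: int) -> List[str]:
    """Return the k captions whose embeddings lie closest to the image embedding."""
    return heapq.nlargest(k, caption_vecs, key=lambda cap: cosine(image_vec, caption_vecs[cap]))


def beam_search(
    next_logprobs: Callable[[str], Dict[str, float]],
    start: str,
    end: str,
    width: int,
    max_len: int,
) -> List[Tuple[float, List[str]]]:
    """Expand every live hypothesis, then keep only the `width` highest-scoring ones."""
    beams: List[Tuple[float, List[str]]] = [(0.0, [start])]
    finished: List[Tuple[float, List[str]]] = []
    for _ in range(max_len):
        candidates = [
            (score + lp, seq + [tok])
            for score, seq in beams
            for tok, lp in next_logprobs(seq[-1]).items()
        ]
        beams = []
        for score, seq in heapq.nlargest(width, candidates, key=lambda c: c[0]):
            # Hypotheses that emitted the end token stop growing.
            if seq[-1] == end:
                finished.append((score, seq))
            else:
                beams.append((score, seq))
        if not beams:
            break
    # Best-scoring sequence first; unfinished beams are included if max_len was hit.
    return sorted(finished + beams, key=lambda c: c[0], reverse=True)


# Hypothetical toy data standing in for caption embeddings and a trained decoder.
captions = {
    "a man on a horse": [1.0, 0.0],
    "a dog in a park": [0.0, 1.0],
    "a rider at dusk": [0.9, 0.1],
}
image = [1.0, 0.0]

TABLE = {  # log-probability of the next word given the previous word
    "<s>": {"she": math.log(0.6), "the": math.log(0.4)},
    "she": {"smiled": math.log(0.5), "ran": math.log(0.5)},
    "the": {"ship": math.log(0.9), "storm": math.log(0.1)},
    "smiled": {"</s>": 0.0},
    "ran": {"</s>": 0.0},
    "ship": {"</s>": 0.0},
    "storm": {"</s>": 0.0},
}

print(top_k_captions(image, captions, k=2))
# ['a man on a horse', 'a rider at dusk']
for width in (1, 2):
    best = beam_search(lambda w: TABLE[w], "<s>", "</s>", width=width, max_len=5)[0][1]
    print(width, best)
# 1 ['<s>', 'she', 'smiled', '</s>']
# 2 ['<s>', 'the', 'ship', '</s>']
```

With width 1 the search is greedy: it commits to "she" (probability 0.6) and ends with a sentence of probability 0.3. Width 2 keeps "the" alive long enough to find "the ship" (probability 0.36), at the cost of scoring twice as many hypotheses per step.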

Text Processing

The input text that we used to train our own sentence encoder-decoder was scraped from Project Gutenberg. We selected books that fit the following genres:

- Biography

- Science Fiction

This resulted in 3 sets of 6 sentences per book.
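
The write-up does not describe the preprocessing code itself. One plausible way to get fixed-size passages like these from a plain-text Gutenberg book is sketched below: strip the Project Gutenberg header and license footer, split the body into sentences, and take 3 non-overlapping runs of 6 consecutive sentences spread through the book. The marker patterns, the naive regex sentence splitter, the even spacing, and the function names are our assumptions, not the procedure actually used.

```python
import re
from typing import List

# Assumed marker formats; Gutenberg files use both "THIS" and "THE" variants.
START = re.compile(r"\*\*\* ?START OF (?:THIS|THE) PROJECT GUTENBERG EBOOK.*?\*\*\*", re.IGNORECASE)
END = re.compile(r"\*\*\* ?END OF (?:THIS|THE) PROJECT GUTENBERG EBOOK", re.IGNORECASE)


def strip_gutenberg(text: str) -> str:
    """Keep only the book body between the Project Gutenberg start and end markers."""
    start = START.search(text)
    if start:
        text = text[start.end():]
    end = END.search(text)
    if end:
        text = text[:end.start()]
    return text.strip()


def split_sentences(text: str) -> List[str]:
    """Naive splitter: collapse whitespace, then break after '.', '!' or '?'."""
    flat = " ".join(text.split())
    return [s for s in re.split(r"(?<=[.!?])\s+", flat) if s]


def sample_passages(text: str, n_sets: int = 3, set_size: int = 6) -> List[List[str]]:
    """Return up to n_sets non-overlapping runs of set_size consecutive sentences,
    spaced evenly through the book."""
    sentences = split_sentences(strip_gutenberg(text))
    n_groups = len(sentences) // set_size
    step = max(1, n_groups // n_sets)
    groups = [sentences[i * set_size:(i + 1) * set_size] for i in range(0, n_groups, step)]
    return groups[:n_sets]


# Toy "book" with a Gutenberg-style header and footer (hypothetical data).
body = " ".join(f"Sentence {i}." for i in range(36))
book = (
    "Header and license preamble\n"
    "*** START OF THE PROJECT GUTENBERG EBOOK EXAMPLE ***\n"
    + body
    + "\n*** END OF THE PROJECT GUTENBERG EBOOK EXAMPLE ***\nLicense text."
)
for passage in sample_passages(book):
    print(passage[0], "...", passage[-1])
# Sentence 0. ... Sentence 5.
# Sentence 12. ... Sentence 17.
# Sentence 24. ... Sentence 29.
```

A regex splitter will break on abbreviations such as "Mr." and on dialogue like the science fiction sample; a dedicated sentence tokenizer would be a safer choice for real books.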

Sample Text

Biography

There was a salt-marsh that bounded part of the mill-pond, on the edge of which, at high water, we used to stand to fish for minnows. By much trampling, we had made it a mere quagmire. My proposal was to build a wharff there fit for us to stand upon… (Benjamin Franklin's autobiography)

Science Fiction

"What's the matter, Gray? Trying to start something?" "Suppose I were?" asked Gray silkily. Dio was the unofficial leader of the convict-veterans. There was about his thin body and hatchet face some of the grim determination that had made the Martians cling to their dying world and bring life to it again. (Leigh Brackett, “A World is Born”)